Deploying Netsuite scripts and objects has traditionally been somewhat troublesome. The situation is fast changing, and for the better. While not perfect, it’s now possible to fully automate your Netsuite testing and deployments. This post will run through one of the many ways to achieve this. We’ll describe the process, preview some of the code, and include a video demo at the end.

The way you set things up depends largely on whether you keep all your Netsuite scripts in a single repository (a monorepo) or spread them across multiple repositories. This solution was built for a single repository, with each Netsuite feature grouped into its own directory.

What you will need:

- Version control platform (we’re using GitLab)

- CI/CD tool for orchestrating testing and deployment (we’re using GitLab)

- Container image to host and run SDF (we’re using Docker)

- Testing framework (we’re using Jest, which ships with SDF out of the box)

- Helper scripts to facilitate incremental deployments (we’re using Node)

GitLab CI/CD

We’re hosting the repository on GitLab and using their suite of CI/CD tools to manage the entire process from feature creation to testing and deployment.

The goal is an environment where developers commit their Netsuite scripts and objects to a feature branch, signal their intent to deploy by opening and merging a merge request, and have those files automatically tested and deployed to the relevant Netsuite account.

GitLab has a feature called merge trains, which we use for tighter control over our deployment pipeline: a merge request on the train is only merged once a pipeline run against the target branch, combined with any changes ahead of it in the train, has passed. This keeps the code in our Netsuite accounts in sync with our repository branches, which, as you will see, comes in very handy.

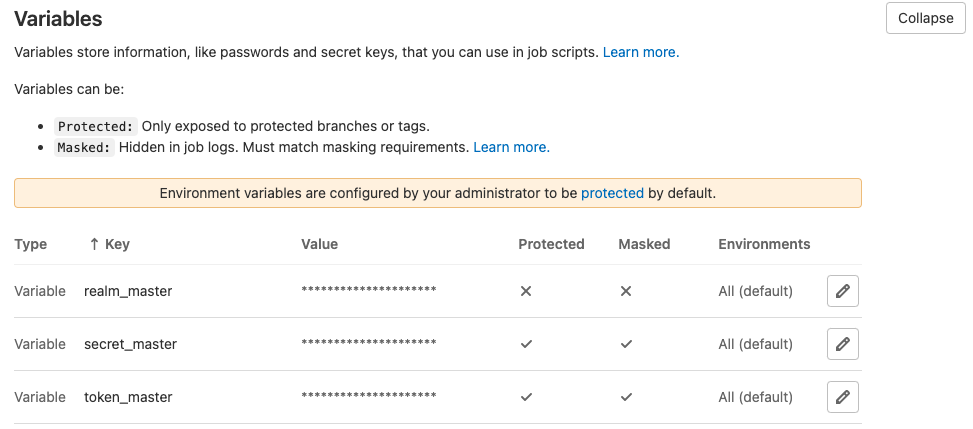

We’ve also created some variables in our GitLab CI/CD settings to hold the credentials SDF needs to authenticate against each Netsuite account. One setup is to create a branch for each Netsuite account we’d like to deploy to, for example:

- master branch -> Netsuite production

- sandbox branch -> Netsuite sandbox

In that case, we keep one set of variables per account/branch pair, named [credential]_[branch] (for example realm_master, token_master and secret_master).

Docker Image

We’ve gone ahead and created a Docker image called node-sdf, built on lightweight Alpine Linux and shipping with all the dependencies required to run the Node version of SDF (the SuiteCloud CLI for Node.js).

Our image looks something like the following and weighs a whopping ~300 MB. You can check an image’s size with docker images image-name, or get the raw byte count with docker inspect image-name | jq '.[0].Size'.

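```dockerfile
FROM alpine:3.14

# Bash, Git, npm (which pulls in Node.js) and the JDK that SDF needs;
# --no-cache keeps the apk package index out of the image
RUN apk add --no-cache bash git npm openjdk11

# SuiteCloud CLI for Node.js, installed globally so `suitecloud` is on the PATH
RUN npm install -g @oracle/suitecloud-cli

CMD ["/bin/bash"]
```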

We’re simply layering Bash, Git, Node (pulled in by the npm package) and the JDK required by SDF on top of our base image. We’ve also chosen to install the SuiteCloud CLI npm package globally, right inside the container.

SDF project

We started a new account customisation project, with the Jest boilerplate included, by running suitecloud project:create -i.

We have a Suitelet script at FileCabinet/SuiteScripts/test/testsl.js:

```javascript
/**
 * @NApiVersion 2.1
 * @NScriptType Suitelet
 * @NModuleScope SameAccount
 */
define([], () => {
  function onRequest({ request, response }) {
    try {
      if (request.method === "GET") log.debug("get request", "received");
      else log.debug("post request", "received");
    } catch (err) {
      log.error("Error", err);
    }
  }

  return {
    onRequest,
  };
});
```
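Because the Suitelet is an AMD-style module that only relies on the globals `define` and `log` which NetSuite provides at runtime, its logic can be exercised outside NetSuite by stubbing those two globals. The sketch below does this in plain Node; the stubs and the `logCalls` array are hypothetical test scaffolding, not the @oracle/suitecloud-unit-testing API, and in a real test you would require the script file rather than inline its factory.

```javascript
// Hypothetical stand-in for NetSuite's global log object: records calls instead of writing to the script log.
const logCalls = [];
globalThis.log = {
  debug: (title, details) => logCalls.push({ level: "debug", title, details }),
  error: (title, details) => logCalls.push({ level: "error", title, details }),
};

// Hypothetical AMD define(): runs the factory immediately and keeps the module it returns.
let suitelet;
globalThis.define = (deps, factory) => {
  suitelet = factory();
};

// Same factory as testsl.js, inlined so this sketch runs on its own.
define([], () => {
  function onRequest({ request, response }) {
    try {
      if (request.method === "GET") log.debug("get request", "received");
      else log.debug("post request", "received");
    } catch (err) {
      log.error("Error", err);
    }
  }
  return { onRequest };
});

// Simulate a GET and a POST; the Suitelet only reads request.method.
suitelet.onRequest({ request: { method: "GET" }, response: {} });
suitelet.onRequest({ request: { method: "POST" }, response: {} });

console.log(logCalls.map((c) => c.title)); // [ 'get request', 'post request' ]
```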

and its corresponding script object, with one deployment, under /Objects/:

```xml
<suitelet scriptid="customscript_testsl">
  <description></description>
  <isinactive>F</isinactive>
  <name>Test Suitelet</name>
  <notifyadmins>F</notifyadmins>
  <notifyemails></notifyemails>
  <notifyowner>T</notifyowner>
  <notifyuser>F</notifyuser>
  <scriptfile>[/SuiteScripts/test/testsl.js]</scriptfile>
  <scriptdeployments>
    <scriptdeployment scriptid="customdeploy_testsl">
      <allemployees>F</allemployees>
      <allpartners>F</allpartners>
      <allroles>T</allroles>
      <audslctrole></audslctrole>
      <eventtype></eventtype>
      <isdeployed>T</isdeployed>
      <isonline>F</isonline>
      <loglevel>DEBUG</loglevel>
      <runasrole>ADMINISTRATOR</runasrole>
      <status>RELEASED</status>
      <title>Test Suitelet</title>
    </scriptdeployment>
  </scriptdeployments>
</suitelet>
```
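The bracketed value in scriptfile is how the object points at its file in the File Cabinet. If you want a pipeline step to check that a changed object’s script actually exists in the project, the references can be pulled out of the XML. A minimal sketch; `referencedScriptFiles` is a hypothetical helper, and it assumes the src/FileCabinet layout of this project.

```javascript
const path = require("path");

// Hypothetical helper: map each <scriptfile>[/SuiteScripts/...]</scriptfile> reference
// in an object's XML to the local project file it should correspond to.
function referencedScriptFiles(objectXml, projectRoot = "src") {
  const refs = [...objectXml.matchAll(/<scriptfile>\[([^\]]+)\]<\/scriptfile>/g)];
  // posix.join normalises the leading slash of the cabinet path.
  return refs.map(([, cabinetPath]) => path.posix.join(projectRoot, "FileCabinet", cabinetPath));
}

const xml = `<suitelet scriptid="customscript_testsl">
  <scriptfile>[/SuiteScripts/test/testsl.js]</scriptfile>
</suitelet>`;

console.log(referencedScriptFiles(xml)); // [ 'src/FileCabinet/SuiteScripts/test/testsl.js' ]
```

The resulting paths could then be checked with fs.existsSync before SDF runs, so a broken reference fails fast.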

Helpers

We’re using a couple of scripts to help us:

- authenticate with Netsuite as part of the deployment process, using suitecloud account:savetoken

- construct the deploy.xml markup dynamically, based on the difference between the source branch (feature branch) and the target branch (master in this example)

The first, sdfauth.js, retrieves the Netsuite SDF credentials for the target branch we’re merging into. It then uses Node’s child_process module to spawn a shell and run the suitecloud command that establishes a connection to our Netsuite account.

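```javascript
const { exec } = require("child_process");

// Credentials live in GitLab CI/CD variables named [credential]_[branch],
// e.g. realm_master, token_master and secret_master for merges into master.
const branch = process.env.CI_MERGE_REQUEST_TARGET_BRANCH_NAME;
const realm = process.env[`realm_${branch}`];
const token = process.env[`token_${branch}`];
const secret = process.env[`secret_${branch}`];

if (!realm || !token || !secret) {
  console.error(`Missing realm/token/secret variables for target branch "${branch}"`);
  process.exit(1);
}

const authCmd = `suitecloud account:savetoken --account ${realm} --authid "cisdf" --tokenid ${token} --tokensecret ${secret}`;

// exec(command, callback) runs authCmd in a shell and buffers its output.
exec(authCmd, (error, stdout, stderr) => {
  if (stderr) console.error(`stderr: ${stderr}`);
  if (error) {
    // error.message repeats the full command line, secrets included, so only the exit code is logged.
    // Exiting non-zero stops the `&&` chain in package.json before anything is deployed.
    console.error(`suitecloud account:savetoken failed with exit code ${error.code}`);
    process.exit(1);
  }
  console.log(`stdout: ${stdout}`);
});
```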
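Because exec hands the whole command string to a shell, a token secret containing shell metacharacters would be mangled before it reached the CLI. A hypothetical variant uses execFile, which runs the binary directly with an argument array. `savetokenArgs` is an illustrative name; the flags are the ones used above, and the sketch assumes `suitecloud` is on the PATH, as it is in the node-sdf image.

```javascript
const { execFile } = require("child_process");

// Build the `suitecloud account:savetoken` arguments from the [credential]_[branch]
// variables in `env`; returns null when any credential is missing.
function savetokenArgs(env, branch) {
  const realm = env[`realm_${branch}`];
  const token = env[`token_${branch}`];
  const secret = env[`secret_${branch}`];
  if (!realm || !token || !secret) return null;
  return [
    "account:savetoken",
    "--account", realm,
    "--authid", "cisdf",
    "--tokenid", token,
    "--tokensecret", secret,
  ];
}

// GITLAB_CI is set by GitLab runners, so the CLI is only invoked inside a CI job.
if (process.env.GITLAB_CI) {
  const args = savetokenArgs(process.env, process.env.CI_MERGE_REQUEST_TARGET_BRANCH_NAME);
  if (!args) {
    console.error("Missing realm/token/secret variables for the target branch");
    process.exit(1);
  }
  // No shell is involved: each array element reaches suitecloud as exactly one argument.
  execFile("suitecloud", args, (error, stdout, stderr) => {
    if (stderr) console.error(stderr);
    if (error) {
      // error.code is the exit code, or a string such as "ENOENT" if suitecloud was not found.
      process.exit(typeof error.code === "number" ? error.code : 1);
    }
    console.log(stdout);
  });
}
```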

The second helper, deploy.js, builds our deploy.xml file from the git diff, which we pass in as command-line arguments:

```shell
node ci/deploy.js $(git diff --name-only --diff-filter=d $CI_MERGE_REQUEST_TARGET_BRANCH_SHA $CI_MERGE_REQUEST_SOURCE_BRANCH_SHA)
```

- CI_MERGE_REQUEST_TARGET_BRANCH_SHA and CI_MERGE_REQUEST_SOURCE_BRANCH_SHA are predefined GitLab CI/CD variables, populated in merged results (and therefore merge train) pipelines, which we use to compare the feature branch with the target branch.

- --diff-filter=d filters out scripts and objects that have been deleted, since SDF cannot delete them. As a workaround, you could write custom logic that detects removed files and undeploys the affected scripts by setting isdeployed to F on their script deployments.

- To summarise the code below, we’re detecting changed scripts and objects, filtering out any changed files outside of the /FileCabinet/SuiteScripts and /Objects/ folders (these would naturally still be committed to the repo, just not deployed by SDF), and building the deploy.xml markup needed for SDF deployment of our feature.
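For the undeploy workaround, the deleted paths can be listed with git diff --name-only --diff-filter=D (uppercase D selects only deletions, whereas the lowercase d above excludes them), and each deleted object’s last version recovered from the target branch with git show <target-sha>:<path>. The XML change itself is small. A minimal sketch with a hypothetical function name, assuming the recovered XML is then written back under /Objects/ and listed in deploy.xml:

```javascript
// Hypothetical helper for the undeploy workaround: switch off every deployment in a
// script object's XML by flipping <isdeployed>T</isdeployed> to F.
function markUndeployed(objectXml) {
  return objectXml.replace(/<isdeployed>T<\/isdeployed>/g, "<isdeployed>F</isdeployed>");
}

// Example input shaped like the customdeploy_testsl deployment above.
const objectXml = `<scriptdeployment scriptid="customdeploy_testsl">
  <isdeployed>T</isdeployed>
  <status>RELEASED</status>
</scriptdeployment>`;

console.log(markUndeployed(objectXml));
```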

Here’s the messy blob of code that accomplishes the above:

```javascript
const { writeFileSync, existsSync, unlinkSync } = require("fs");

const DEPLOY_FILE = "src/deploy.xml";

// Keep only changed files SDF can deploy (SuiteScripts and Objects) and turn each
// repo path such as src/Objects/x.xml into an SDF <path> entry such as ~/Objects/x.xml.
function deployPathsPrep(modifiedFilePaths) {
  console.log("running deploy prep");
  const onlyNsFiles = modifiedFilePaths.filter(
    (filePath) => filePath.includes("/SuiteScripts/") || filePath.includes("/Objects/")
  );
  return onlyNsFiles.map((nsFile) => {
    const extension = nsFile.split(".");
    return {
      // Only rewrite the leading "src/" folder, not any later "src" in the path.
      path: `<path>${nsFile.replace(/^src\//, "~/")}</path>`,
      type: extension[extension.length - 1],
    };
  });
}

// Scripts (.js) go under <files>, objects (.xml) under <objects>.
function createDeployFile(filesToDeploy) {
  const filesMarkup = filesToDeploy.filter((file) => file.type === "js").map((file) => file.path);
  const objectsMarkup = filesToDeploy.filter((file) => file.type === "xml").map((file) => file.path);
  const deployContent = `<deploy>
  <configuration>
    <path>~/AccountConfiguration/*</path>
  </configuration>
  <files>
    ${filesMarkup.join("")}
  </files>
  <objects>
    ${objectsMarkup.join("")}
  </objects>
  <translationimports>
    <path>~/Translations/*</path>
  </translationimports>
</deploy>`;
  // Synchronous write, so a failure is caught by the try/catch below and fails the job.
  writeFileSync(DEPLOY_FILE, deployContent, "utf8");
  console.log(`created deploy.xml file...${deployContent}`);
}

try {
  const [, , ...modifiedScripts] = process.argv;
  console.log("modified scripts: ", modifiedScripts);
  const filesToDeploy = deployPathsPrep(modifiedScripts);
  console.log("filesToDeploy: ", filesToDeploy);
  if (!filesToDeploy.length) {
    // Remove any existing deploy.xml so the [ -s src/deploy.xml ] check in package.json skips SDF.
    if (existsSync(DEPLOY_FILE)) unlinkSync(DEPLOY_FILE);
    console.log("No objects to deploy, abandoning...");
    process.exit(0);
  }
  createDeployFile(filesToDeploy);
} catch (err) {
  console.error("Something went wrong creating deploy file holmes: ", err);
  process.exit(1);
}
```
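Passing the diff through $(...) means the shell splits git’s output on whitespace, so a file name containing a space would reach deploy.js as two arguments. A hypothetical alternative is to let the helper ask git itself. The sketch assumes git is on the PATH and both SHAs are present in the job’s clone; `changedFiles` and `parseNameOnlyZ` are illustrative names.

```javascript
const { execFileSync } = require("child_process");

// With -z, git terminates each path with a NUL byte and does not quote it,
// so paths containing spaces survive intact.
function parseNameOnlyZ(output) {
  return output.split("\0").filter(Boolean);
}

// Ask git for the changed (non-deleted) paths between two commits.
function changedFiles(targetSha, sourceSha) {
  const output = execFileSync(
    "git",
    ["diff", "--name-only", "-z", "--diff-filter=d", targetSha, sourceSha],
    { encoding: "utf8" }
  );
  return parseNameOnlyZ(output);
}

// In the pipeline, the result could be handed to deployPathsPrep() instead of process.argv.
if (process.env.GITLAB_CI) {
  console.log(
    changedFiles(
      process.env.CI_MERGE_REQUEST_TARGET_BRANCH_SHA,
      process.env.CI_MERGE_REQUEST_SOURCE_BRANCH_SHA
    )
  );
}
```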

Pipeline Config

Our GitLab pipeline:

- is set up to run two kinds of deployment flow, each in its own stage

- stage validate is triggered whenever a merge request is opened or updated ($CI_MERGE_REQUEST_EVENT_TYPE == "merged_result")

- stage deploy is triggered whenever a merge request is added to the merge train, i.e. when someone merges it ($CI_MERGE_REQUEST_EVENT_TYPE == "merge_train"); the merge itself only completes if this pipeline passes

Stage validate will:

- run the project’s unit tests

- detect file changes and create the deploy.xml file

- create a connection to the target Netsuite account

- add project dependencies

- validate the project against the account (suitecloud project:validate --server)

- and finally run a preview of the deployment with suitecloud project:deploy --dryrun, to check that the project is set up correctly. This works around the lack of atomic deployments: problems surface in the preview rather than partway through a real deploy.

Stage deploy will do the exact same thing, but will omit the --dryrun flag and actually deploy the project to the Netsuite account.

If the feature branch fails SDF validation or hits an error while deploying to Netsuite, the merge request stays open and unmerged, ready to be fixed and retried later.
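Since the two stages differ only in the --dryrun flag, that choice could also live in one place. A hypothetical sketch mapping CI_MERGE_REQUEST_EVENT_TYPE to suitecloud arguments; the function name is illustrative, while the event types and the flag are the ones this pipeline already uses.

```javascript
// Hypothetical helper: pick the `suitecloud project:deploy` arguments for the pipeline type,
// so one script could serve both the validate and deploy stages.
function deployArgs(eventType) {
  switch (eventType) {
    case "merged_result": // merge request opened or updated: preview only
      return ["project:deploy", "--dryrun"];
    case "merge_train": // merge request on the merge train: deploy for real
      return ["project:deploy"];
    default: // any other pipeline: leave the Netsuite account alone
      return null;
  }
}

console.log(deployArgs(process.env.CI_MERGE_REQUEST_EVENT_TYPE));
```

Calling execFile("suitecloud", deployArgs(...)) after the auth, adddependencies and validate steps could then replace the near-identical sdfdeploysoft and sdfdeploy scripts; whether that beats two explicit npm scripts is a matter of taste.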

Here’s the pipeline config file, .gitlab-ci.yml:

```yaml
image: suitehearts/node-sdf

before_script:
  - npm ci
  # printenv writes every variable to the job log; make sure the credential variables are masked
  - printenv

stages:
  - validate
  - deploy

validate:
  stage: validate
  script:
    - npm run test
    - npm run deployfile
    - npm run validate
  only:
    refs:
      - merge_requests
    variables:
      - $CI_MERGE_REQUEST_TARGET_BRANCH_NAME == "master" && $CI_MERGE_REQUEST_EVENT_TYPE == "merged_result"

deploy:
  stage: deploy
  script:
    - npm run test
    - npm run deployfile
    - npm run deploy
  only:
    refs:
      - merge_requests
    variables:
      - $CI_MERGE_REQUEST_TARGET_BRANCH_NAME == "master" && $CI_MERGE_REQUEST_EVENT_TYPE == "merge_train"
```

Package.json

Here are the npm scripts the pipeline uses to deploy:

```json
{
  "name": "suitecloud-project",
  "version": "1.0.0",
  "scripts": {
    "test": "jest",
    "deployfile": "node ci/deploy.js $(git diff --name-only --diff-filter=d $CI_MERGE_REQUEST_TARGET_BRANCH_SHA $CI_MERGE_REQUEST_SOURCE_BRANCH_SHA)",
    "validate": "if [ -s src/deploy.xml ]; then npm run sdfdeploysoft; else echo \"no files/objects to deploy\"; fi;",
    "deploy": "if [ -s src/deploy.xml ]; then npm run sdfdeploy; else echo \"no files/objects to deploy\"; fi;",
    "sdfdeploysoft": "node ci/sdfauth.js && suitecloud project:adddependencies && suitecloud project:validate --server && suitecloud project:deploy --dryrun",
    "sdfdeploy": "node ci/sdfauth.js && suitecloud project:adddependencies && suitecloud project:validate --server && suitecloud project:deploy",
    "deploylocal": "node ci/deploy.js $(git diff --name-only --diff-filter=d $(git rev-parse origin/master) $(git rev-parse HEAD@{0}))"
  },
  "devDependencies": {
    "jest": "^27.0.6",
    "@oracle/suitecloud-unit-testing": "^1.1.3"
  }
}
```

Here is a demo of the above in action:

Leave your feedback in the comments below. We also welcome enquiries via the Contact Us button, or by email at info AT suitehearts DOT net if you prefer.